Congratulations to CSE Assistant Professor Caiwen Ding who, in collaboration with Wujie Wen from Lehigh University and Xiaolin Xu from Northeastern University, was awarded a $1.2M NSF grant for “Accelerating Privacy-Preserving Machine Learning as a Service: From Algorithm to Hardware.” This research project focuses on the design of efficient algorithm-hardware co-optimized solutions to accelerate privacy-preserving machine learning on diverse hardware platforms.

Source: NSF

Machine learning (ML) as a service is driven by clients’ ever-increasing need for intelligent data processing on cloud servers, where powerful ML models are hosted. Although pervasive, outsourced ML processing poses real threats to the data privacy of individuals and businesses. For example, either the clients must share their sensitive data, such as healthcare records or financial information, with the server, or the server must disclose the model to the clients. To guarantee privacy, cryptographic protocols such as Homomorphic Encryption (HE) and Multi-Party Computation (MPC) enable ML analytics directly on encrypted data. While enticing, a large gap remains between theory and practice, e.g., long latency caused by the prohibitively expensive computation or communication overhead of operating on ciphertext. This project aims to make private ML services practical by developing efficient, scalable and encryption-conscious computing paradigms. The project’s novelties lie in new ML-specific cryptographic operators, accuracy-preserving and crypto-friendly neural architectures, and pioneering algorithm-hardware co-design methodologies. The project’s broader significance and importance are: (1) to advance trustworthy artificial intelligence (AI), one of the national strategic pillars of the National AI Initiative; (2) to deepen the understanding of interactions among cryptography, machine learning and hardware acceleration; (3) to enrich the computer engineering curriculum and the training of students from diverse backgrounds through relevant programs at Lehigh University, Northeastern University, and the University of Connecticut.

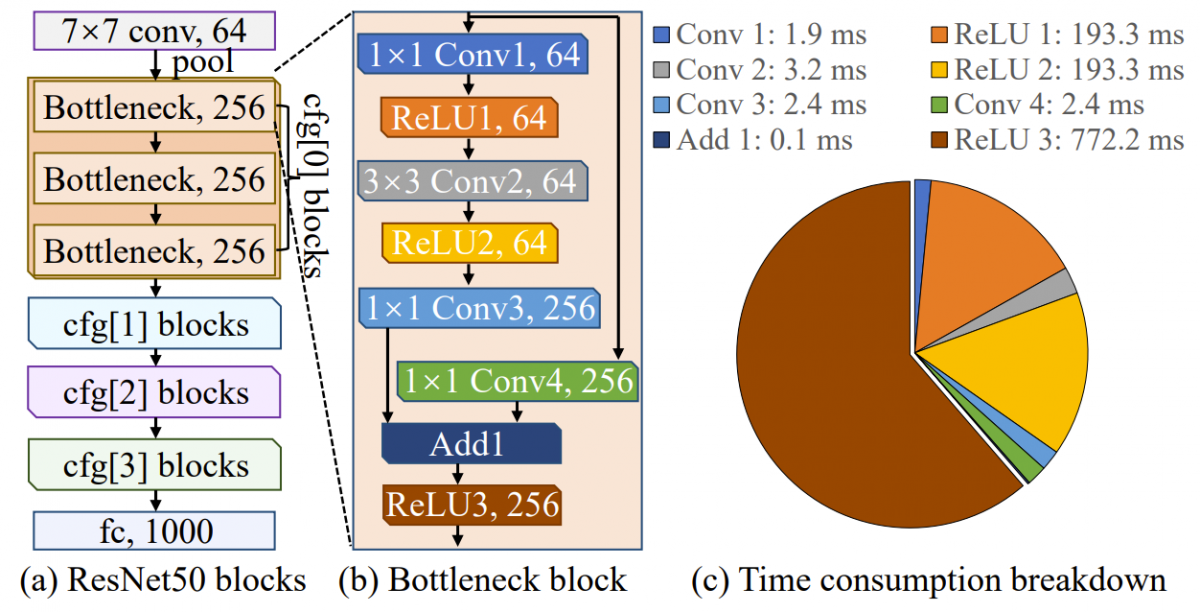

The project will develop a multifaceted design paradigm for efficient, scalable and practical algorithm-hardware co-optimized solutions to significantly accelerate privacy-preserving machine learning on hardware platforms such as FPGAs. This project consists of three interwoven research thrusts: (1) to orchestrate information representation and model sparsity in the encryption domain to fundamentally decrease the memory and computation footprint of HE inference; (2) to overcome the ultra-high overhead associated with MPC-based solutions through techniques such as encryption-aware model truncation and partial hardware reconfiguration; (3) to search for crypto-friendly and accuracy-preserving neural architectures through joint optimization of non-linear operation reduction and closed-loop “algorithm-hardware” design space exploration.
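For readers unfamiliar with MPC, the following minimal sketch shows the basic idea behind computing on data that no single party can read. It is an illustration only, not the project's method. It assumes a textbook two-party additive secret-sharing scheme, public integer weights, and non-negative integer inputs. The names `share`, `reconstruct`, `local_dot`, and `private_linear` and the modulus `PRIME` are hypothetical choices made for this example.

```python
import secrets

# Illustrative modulus for share arithmetic (an assumption of this sketch).
PRIME = 2**61 - 1


def share(value, modulus=PRIME):
    """Split an integer into two additive shares that sum to value mod modulus."""
    r = secrets.randbelow(modulus)
    return r, (value - r) % modulus


def reconstruct(s0, s1, modulus=PRIME):
    """Recombine two additive shares into the original value."""
    return (s0 + s1) % modulus


def local_dot(weights, x_shares, modulus=PRIME):
    """One party's dot product, computed on its own shares only."""
    return sum(w * x for w, x in zip(weights, x_shares)) % modulus


def private_linear(weights, inputs):
    """Evaluate a linear layer on secret-shared inputs held by two parties."""
    shares = [share(x) for x in inputs]
    party0 = [s[0] for s in shares]  # seen only by party 0
    party1 = [s[1] for s in shares]  # seen only by party 1
    y0 = local_dot(weights, party0)
    y1 = local_dot(weights, party1)
    # Only the recombined result reveals the true output.
    return reconstruct(y0, y1)


if __name__ == "__main__":
    print(private_linear([2, 3, 5], [10, 20, 30]))
```

In this scheme, linear operations need no communication between the parties because each one works on its own shares. Non-linear operations such as ReLU cannot be evaluated share by share and require interactive protocols, which is one reason reducing non-linear operations matters for the overhead described above.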